Laplace's Equation with Dirichlet Conditions: Finite Discretization and Solution by Jacobi & Gauss-Seidel

Laplace's Equation

...

Boundary Condition

Discretization

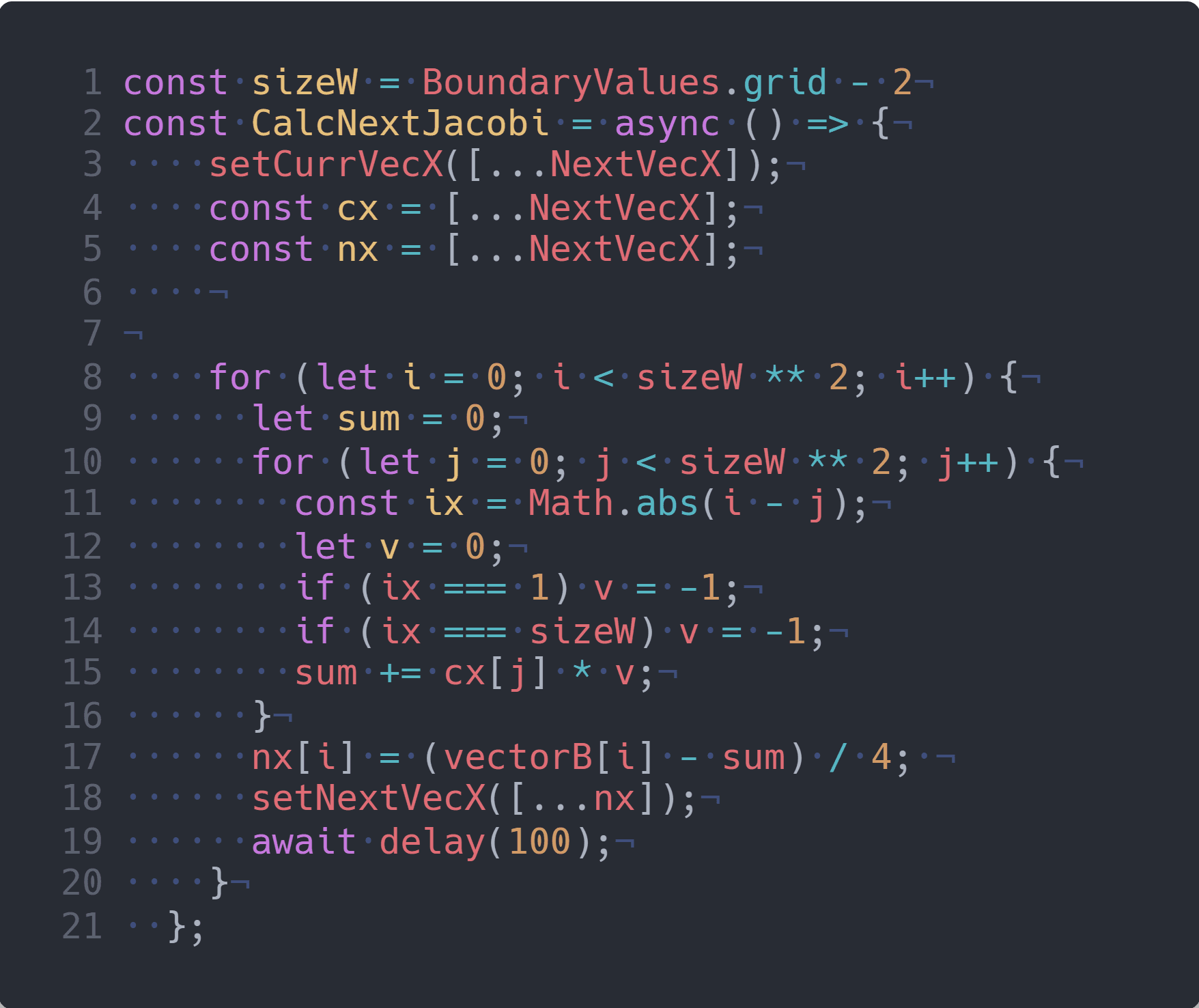

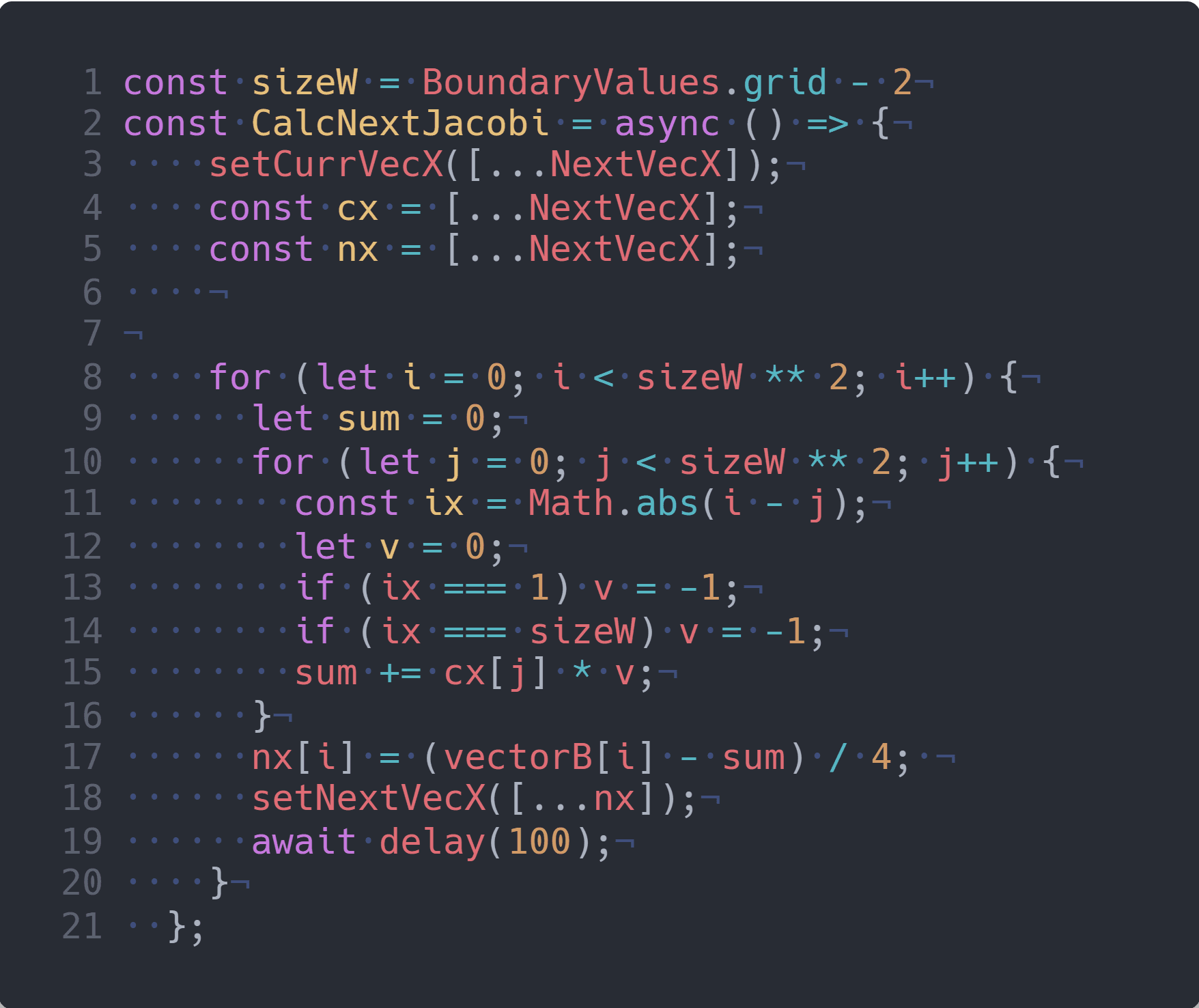

Jacobi Method
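As a rough illustration of the Jacobi method for this problem, here is a minimal sketch. It assumes a uniform square grid and the standard five-point stencil, where each interior value is replaced by the average of its four neighbours from the previous sweep. The names `jacobi_laplace`, `boundary`, `tol` and `max_iter` are my own choices for this example and may not match the discretization shown in the figures above.

```python
def jacobi_laplace(n, boundary, tol=1e-8, max_iter=10_000):
    """Jacobi sweeps for Laplace's equation on an n x n grid.

    boundary(i, j) is a hypothetical helper returning the prescribed
    (Dirichlet) value at edge node (i, j). Interior nodes start at 0.
    Returns the grid and the number of sweeps performed.
    """
    u = [[boundary(i, j) if i in (0, n - 1) or j in (0, n - 1) else 0.0
          for j in range(n)] for i in range(n)]
    for sweep in range(1, max_iter + 1):
        new = [row[:] for row in u]  # Jacobi reads only the old grid
        diff = 0.0
        for i in range(1, n - 1):
            for j in range(1, n - 1):
                # Five-point average (assumes equal spacing in both directions)
                new[i][j] = 0.25 * (u[i - 1][j] + u[i + 1][j]
                                    + u[i][j - 1] + u[i][j + 1])
                diff = max(diff, abs(new[i][j] - u[i][j]))
        u = new
        if diff < tol:  # stop when the largest update is below tol
            return u, sweep
    return u, max_iter


# Usage: edge values equal to the row index, so the exact solution is u = i.
grid, sweeps = jacobi_laplace(5, lambda i, j: float(i))
print(grid[2][2], sweeps)
```

The stopping test here is based on the size of the last update, which is only a proxy for the true error; a stricter criterion may be preferable in practice.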

Convergence

Gauss-Seidel Method
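For comparison, here is a minimal Gauss-Seidel sketch under the same assumptions (uniform square grid, five-point stencil). The only change from a Jacobi sweep is that each node is updated in place, so neighbours already visited in the current sweep contribute their new values. The names `gauss_seidel_laplace`, `boundary`, `tol` and `max_iter` are hypothetical and chosen only for this example.

```python
def gauss_seidel_laplace(n, boundary, tol=1e-8, max_iter=10_000):
    """Gauss-Seidel sweeps for Laplace's equation on an n x n grid.

    boundary(i, j) is a hypothetical helper returning the prescribed
    (Dirichlet) value at edge node (i, j). Interior nodes start at 0.
    Returns the grid and the number of sweeps performed.
    """
    u = [[boundary(i, j) if i in (0, n - 1) or j in (0, n - 1) else 0.0
          for j in range(n)] for i in range(n)]
    for sweep in range(1, max_iter + 1):
        diff = 0.0
        for i in range(1, n - 1):
            for j in range(1, n - 1):
                old = u[i][j]
                # In-place update: u[i-1][j] and u[i][j-1] are already new
                u[i][j] = 0.25 * (u[i - 1][j] + u[i + 1][j]
                                  + u[i][j - 1] + u[i][j + 1])
                diff = max(diff, abs(u[i][j] - old))
        if diff < tol:  # stop when the largest update is below tol
            return u, sweep
    return u, max_iter


# Usage: edge values equal to the row index, so the exact solution is u = i.
grid, sweeps = gauss_seidel_laplace(5, lambda i, j: float(i))
print(grid[2][2], sweeps)
```

Updating in place also means no second copy of the grid is needed, which is one practical reason to prefer this variant.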

Convergence


Blog-style page where I will publish my study notes, especially on topics such as mathematics, physics, and perhaps some programming

...